Linear Models

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits import mplot3d

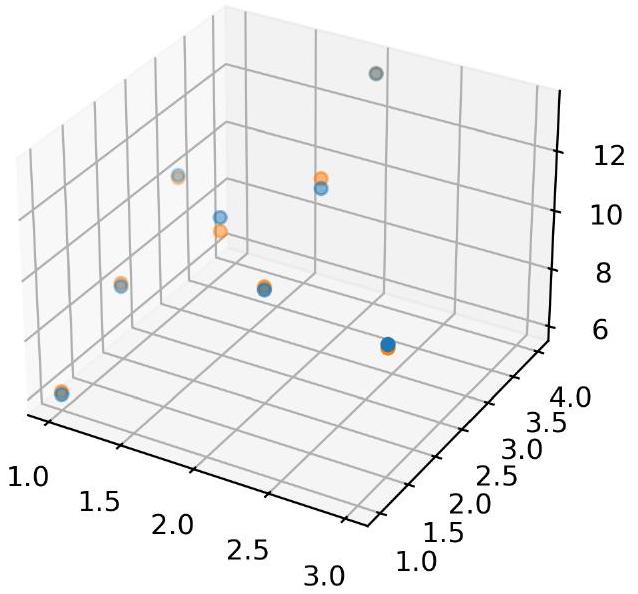

# Create data

X_list = [[1.,1.],[1.,2.],[2.,2.],[2.,3.],[1.5,2.5],[2.,4.],[1.,3.],[3.,1.5]] # Create unlabeled data points (instances) as a Python list

X = np.array(X_list) # Turn the instances into a NumPy array of shape (8, 2)

Proj = np.array([1,2]) # True weights used to generate the labels

y = np.dot(X,Proj)+3 # Noise-free labels: weighted sum plus an intercept of 3

y = y + np.random.rand(y.size) # Add uniform noise in [0, 1) to mimic experimental measurements

# Define a function that computes the analytical (normal-equation) solution of a linear model

def LinearRegression(X,y):
    X_bar = np.hstack((X,np.ones([X.shape[0],1]))) # Append a column of ones: I = 8 by J+1 = 3
    W_hat = np.linalg.inv(X_bar.T @ X_bar) @ X_bar.T @ y # Weights and intercept: J+1 = 3 entries
    return W_hat, X_bar

# Symbols are the same as those in the textbook

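W_hat, X_bar = LinearRegression(X,y)

W = W_hat[:-1] # Weights: all entries except the last

b = W_hat[-1] # Intercept: the last entry, matching the appended column of ones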

# Fitted values from the solution

Y_fit = np.dot(X_bar,W_hat)

# Plot and compare results

fig = plt.figure()

ax = plt.axes(projection='3d')

ax.scatter(X[:,0],X[:,1],y,c='b',marker='*') # Noisy labels

ax.scatter(X[:,0],X[:,1],Y_fit,c='r',marker='d') # Fitted values
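
One way to sanity-check the analytical solution is to compare it with NumPy's least-squares solver, `np.linalg.lstsq`, which solves the same problem without forming an explicit matrix inverse. The following is a minimal sketch, not part of the original listing: it rebuilds the same eight instances, and the fixed seed and the names `rng`, `W_normal`, and `W_lstsq` are assumptions introduced here for illustration.

```python
import numpy as np

# Fixed seed so the run is reproducible (an assumption; the listing above is unseeded)
rng = np.random.default_rng(0)

# Same eight instances as in the listing above
X = np.array([[1., 1.], [1., 2.], [2., 2.], [2., 3.],
              [1.5, 2.5], [2., 4.], [1., 3.], [3., 1.5]])

# Labels: true weights [1, 2], intercept 3, plus uniform noise in [0, 1)
y = X @ np.array([1., 2.]) + 3 + rng.random(X.shape[0])

# Append a column of ones so the intercept is learned as the last coefficient
X_bar = np.hstack((X, np.ones((X.shape[0], 1))))

# Normal-equation solution, as computed by LinearRegression above
W_normal = np.linalg.inv(X_bar.T @ X_bar) @ X_bar.T @ y

# Least-squares solution from NumPy's solver
W_lstsq, residuals, rank, sing_vals = np.linalg.lstsq(X_bar, y, rcond=None)

# The two coefficient vectors should agree up to floating-point error
print("normal equation:", W_normal)
print("lstsq          :", W_lstsq)
print("agree          :", np.allclose(W_normal, W_lstsq))
```

Because the noise is drawn from a uniform distribution on [0, 1), its mean is 0.5 rather than 0, so the fitted intercept will tend to land above the nominal value of 3. With only eight points, the recovered weights will also differ somewhat from [1, 2].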